We seem to need foundational narratives.

Big picture stories that make sense of history’s bacchanal march into the apocalypse.

Broad-stroke predictions about how artificial intelligence (AI) will shape the future of humanity made by those with power arising from knowledge, money, and/or social capital.[1] Knowledge, as there still aren’t actually that many real-deal machine learning researchers in the world (despite the startling growth in paper submissions to conferences like NIPS), people who get excited by linear algebra in high-dimensional spaces (the backbone of deep learning) or the patient cataloguing of assumptions required to justify a jump from observation to inference.[2] Money, as income inequality is a very real thing (and a thing too complex to say anything meaningful about in this post). For our purposes, money is a rhetoric amplifier, be that from a naive fetishism of meritocracy, where we mistakenly align wealth with the ability to figure things out better than the rest of us,[3] or cynical acceptance of the fact that rich people work in private organizations or public institutions with a scope that impacts a lot of people. Social capital, as our contemporary Delphic oracles spread wisdom through social networks, likes and retweets governing what we see and influencing how we see (if many people, in particular those we want to think like and be like, like something, we’ll want to like it too), our critical faculties on amphetamines as thoughtful consideration and deliberation mean missing the boat, gut invective the only response fast enough to keep pace before the opportunity to get a few more followers passes us by, Delphi sprouting boredom like a 5 o’clock shadow, already on to the next big thing. Ironic that big picture narratives must be made so hastily in the rat race to win mindshare before another member of the Trump administration gets fired.

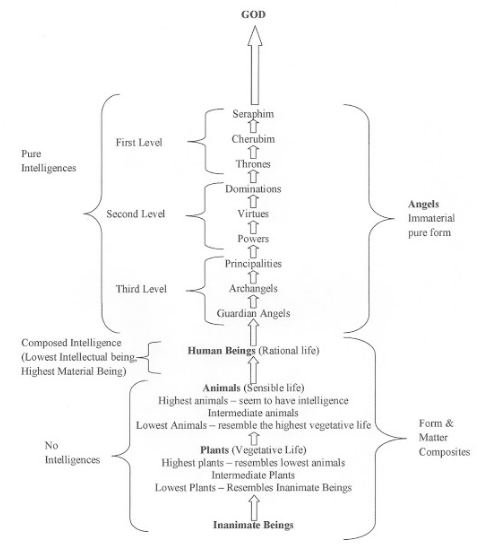

Most foundational narratives about the future of AI rest upon an implicit hierarchy of being that has been around for a long time. While proffered by futurists and atheists, the hierarchy dates back to the Great Chain of Being that medieval Christian theologians like Thomas Aquinas built to cut the physical and spiritual world into analytical pieces, applying Aristotelian scientific rigor to spiritual topics.

The hierarchy provides a scale from inanimate matter to immaterial, pure intelligence. Rocks don’t get much love on the great chain of being, even if they carry the wisdom and resilience of millions of years of existence, contain, in their sifting, shifting grains of sand, the secrets of fragility and the whispered traces of tectonic plates and sunken shores. Plants get a little more love than rocks, and apparently Venus fly traps (plants that resemble animals?) get more love than, say, yeast (if you’re a fellow member of the microbiome-issue club, you, like me, are in total awe of how yeast are opportunistic sons of bitches who sense the slightest shift in pH and invade vulnerable tissue with the collective force of stealth guerrilla warriors). Humans are hybrids, half animal, half rational spirit, our sordid materiality, our silly mortality, our mechanical bodies ever weighing us down and holding us back from our real potential as brains in vats or consciousnesses encoded to live forever in the flitting electrons of the digital universe. There are a shit ton of angels. Way more angel castes than people castes. It feels repugnant to demarcate people into classes, so why not project differences we live day in and day out in social interactions onto angels instead? And, in doing so, basically situate civilized aristocrats as closer to God than the lower and more animalistic members of the human race?
And then God is the abstract patriarch on top of it all, the omnipotent, omniscient, benevolent patriarch who is also the seat of all our logical paradoxes, made of the same stuff as Gödel’s incompleteness theorem, the guy who can be at once father and son, be the circle with the center everywhere and the circumference nowhere, the master narrator who says, don’t worry, I got this, sure that hurricane killed tons of people, sure it seems strange that you can just walk into a store around the corner and buy a gun and there are mass shootings all the time, but trust me, if you could see the big picture like I see the big picture, you’d get how this confusing pain will actually result in the greatest good to the most people.

I’m going to be sloppy here and not provide hyperlinks to specific podcasts or articles that endorse variations of this hierarchy of being: hopefully you’ve read a lot of these and will have sparks of recognition with my broad stroke picture painting.[4] But what I see time and again are narratives that depict AI within a long history of evolution moving from unicellular prokaryotes to eukaryotes to slime to plants to animals to chimps to homo erectus to homo sapiens to transhuman superintelligence as our technology changes ever more quickly and we have a parallel data world where we leave traces of every activity in sensors and clicks and words and recordings and images and all the things. These big picture narratives focus on the pre-frontal cortex as the crowning achievement of evolution, man distinguished from everything else by his ability to reason, to plan, to overcome the rugged tug of instinct and delay gratification until the future, to make guesses about the probability that something might come to pass in the future and to act in alignment with those guesses to optimize rewards, often rewards focused on self gain and sometimes on good across a community (with variations). And the big thing in this moment of evolution with AI is that things are folding in on themselves, we no longer need to explicitly program tools to do things, we just store all of human history and knowledge on the internet and allow optimization machines to optimize, reconfiguring data into information and insight and action and getting feedback on these actions from the world according to the parameters and structure of some defined task.
And some people (e.g., Gary Marcus or Judea Pearl) say no, no, these bottom up stats are not enough, we are forgetting what is actually the real hallmark of our pre-frontal cortex, our ability to infer causal relationships between phenomenon A and phenomenon B, and it is through this appreciation of explanation and cause that we can intervene and shape the world to our ends or even fix injustices, free ourselves from the messy social structures of the past and open up the ability to exercise normative agency together in the future (I’m actually in favor of this kind of thinking). So we evolve, evolve, make our evolution faster with our technology, cut our genes crisply and engineer ourselves to be smarter. And we transcend the limitations of bodies trapped in time, transcend death, become angel as our consciousness is stored in the quick complexity of hardware finally able to capture plastic parallel processes like brains. And inch one step further towards godliness, ascending the hierarchy of being. Freeing ourselves. Expanding. Conquering the march of history, conquering death with blood transfusions from beautiful boys, like vampires. Optimizing every single action to control our future fate, living our lives with the elegance of machines.

It’s an old story.

Many science fiction novels feel as epic as Disney movies because they adapt the narrative scaffold of traditional epics dating back to Homer’s Iliad and Odyssey and Virgil’s Aeneid. And one epic quite relevant for this type of big picture narrative about AI is John Milton’s Paradise Lost, the epic to end all epics, the swan song that signaled the shift to the novel, the fusion of Genesis and Rome, an encyclopedia of seventeenth-century scientific thought and political critique as the British monarchy collapsed under the rushing sword of Oliver Cromwell.

Most relevant is how Milton depicts the fall of Eve.

Milton lays the groundwork for Eve’s fall in Book Five, when the archangel Raphael visits his friend Adam to tell him about the structure of the universe. Raphael has read his Aquinas: like proponents of superintelligence, he endorses the great chain of being. Here’s his response to Adam when the “Patriarch of mankind” offers the angel mere human food:

Raphael basically charts the great chain of being in the passage. Angels think faster than people, they reason in intuitions while we have to break things down analytically to have any hope of communicating with one another and collaborating. Daniel Kahneman’s partition between discursive and intuitive thought in Thinking, Fast and Slow had an analogue in the seventeenth century, where philosophers distinguished the slow, composite, discursive knowledge available in geometry and math proofs from the fast, intuitive, social insights that enabled some to size up a room and be the wittiest guest at a cocktail party.

Raphael explains to Adam that, through patient, diligent reasoning and exploration, he and Eve will come to be more like angels, gradually scaling the hierarchy of being to ennoble themselves. But on the condition that they follow the one commandment never to eat the fruit from the forbidden tree, a rule that escapes reason, that is a dictum intended to remain unexplained, a test of obedience.

But Eve is more curious than that and Satan uses her curiosity to his advantage. In Book Nine, Milton fashions Satan, in his trappings as a snake, as a master orator who preys upon Eve’s curiosity to persuade her to eat of the forbidden fruit. After failing to exploit her vanity, he changes strategies and exploits her desire for knowledge, basing his argument on an analogy up the great chain of being:

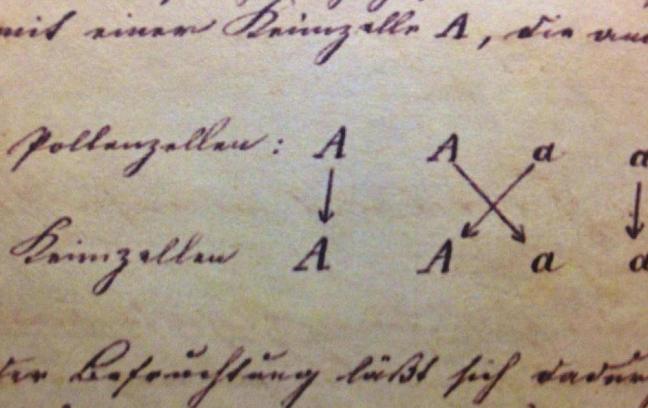

Satan exploits Eve’s mental model of the great chain of being to tempt her to eat the forbidden apple. Mere animals, snakes can’t talk. A talking snake, therefore, must have done something to cheat the great chain of being, to elevate itself to the status of man. So too, argues Satan, can Eve shortcut her growth from man to angel by eating the forbidden fruit. The fall of mankind rests upon our propensity to rely on analogy. May the defenders of causal inference rejoice.[5]

The point is that we’ve had a complex relationship with our own rationality for a long time. That Judeo-Christian thought has a particular way of personifying the artifacts and precipitates of abstract thoughts into moral systems. That, since the scientific revolution, science and religion have split from one another but continue to cross paths, if only because they both rest, as Carlo Rovelli so beautifully expounds in his lyrical prose, on our wonder, on our drive to go beyond the immediately visible, on our desire to understand the world, on our need for connection, community, and love.

But do we want to limit our imaginations to such a stale hierarchy of being? Why not be bolder and more futuristic? Why not forget gods and angels and, instead, recognize these abstract precipitates as the byproducts of cognition? Why not open our imaginations to appreciate the radically different intelligence of plants and rocks, the mysterious capabilities of photosynthesis that can make matter from sun and water (WTF?!?), the communication that occurs in the deep roots of trees, the eyesight that octopuses have all down their arms, the silent and chameleon wisdom of the slot canyons in the southwest? Why not challenge ourselves to greater empathy, to the unique beauty available to beings who die, capsized by senescence and always inclining forward in time?

Why not free ourselves of the need for big picture narratives and celebrate the fact that the future is far more complex than we’ll ever be able to predict?

How can we do this morally? How can we abandon ourselves to what will come and retain responsibility? What might we build if we mimic animal superintelligence instead of getting stuck in history’s linear march of progress?

I believe there would be beauty. And wild inspiration.

[1] This note should have been after the first sentence, but I wanted to preserve the rhetorical force of the bare sentences. My friend Stephanie Schmidt, a professor at SUNY Buffalo, uses the concept of foundational narratives extensively in her work about colonialism. She focuses on how cultures subjugated to colonial power assimilate and subvert the narratives imposed upon them.

[2] Yesterday I had the pleasure of hearing a talk by the always-inspiring Martin Snelgrove about how to design hardware to reduce energy when using trained algorithms to execute predictions in production machine learning. The basic operations undergirding machine learning are addition and multiplication: we’d assume multiplying takes more energy than adding, because multiplying is adding in sequence. But Martin showed how it all boils down to how far electrons need to travel. The broad-stroke narrative behind why GPUs are better for deep learning is that they shuffle electrons around criss-cross structures that look like matrices as opposed to putting them into the linear straitjacket of the CPU. But the geometry can get more fine-grained and complex, as the 256×256 array in Google’s TPU shows. I’m keen to dig into the most elegant geometry for designing for Bayesian inference and sampling from posterior distributions.

[3] Technology culture loves to fetishize failure. Jeremy Epstein helped me realize that failure is only fun if it’s the mid point of a narrative that leads to a turn of events ending with triumphant success. This is complex. I believe in growth mindsets like Ray Dalio proposes in his Principles: there is real, transformative power in shifting how our minds interpret the discomfort that accompanies learning or stretching oneself to do something not yet mastered. I jump with joy at the opportunity to transform the paralyzing energy of anxiety into the empowering energy of growth, and believe it’s critical that more women adopt this mindset so they don’t hold themselves back from positions they don’t believe they are qualified for. Also, it makes total sense that we learn much, much more from failures than we do from successes, in science, where it’s important to falsify, as in any endeavor where we have motivation to change something and grow. I guess what’s important here is that we don’t reduce our empathy for the very real pain of being in the midst of failure, of feeling like one doesn’t have what others have, of being outside the comfort of the bell curve, of the time it takes to outgrow the inheritance and pressure from the last generation and the celebrations of success. Worth exploring.

[4] One is from Tim Urban, as in this Google Talk about superintelligence. I really, really like Urban’s blog. His recent post about choosing a career is remarkably good and his TED talk on procrastination is one of my favorite things on the internet. But his big picture narrative about AI irks me.

[5] Milton actually wrote a book about logic and was even a logic tutor. It’s at once incredibly boring and incredibly interesting stuff.

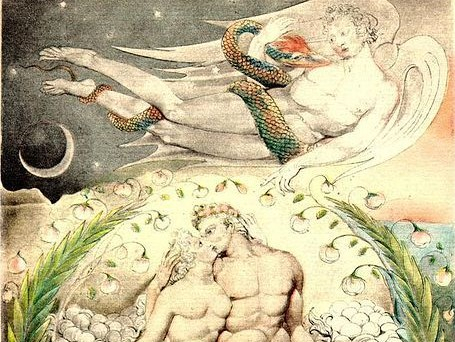

The featured image is the 1808 Butts Set version of William Blake’s “Satan Watching the Endearments of Adam and Eve.” Blake illustrated many of Milton’s works and illustrated Paradise Lost three times, commissioned by three different patrons. The color scheme is slightly different between the Thomas, Butts, and Linnell illustration sets. I prefer the Butts. I love this image. In it, I see Adam relegated to a supporting actor, a prop like a lamp sitting stage left to illuminate the real action between Satan and Eve. I feel empathy for Satan, want to ease his loneliness and forgive him for his unbridled ambition, as he hurls himself tragically into the figure of the serpent to seduce Eve. I identify with Eve, identify with her desire for more, see through her eyes as they look beyond the immediacy of the sexual act and search for transcendence, the temptation that ultimately leads to her fall. The pain we all go through as we wise into acceptance, and learn how to love.