Not every event in your life has had profound significance for you. There are a few, however, that I would consider likely to have changed things for you, to have illuminated your path. Ordinarily, events that change our path are impersonal affairs, and yet are extremely personal. - Don Juan Matus, a (potentially fictional) Yaqui shaman from Mexico

The windowless classroom was dark. We were sitting around a rectangular table looking at a projection of Rembrandt’s Syndics of the Drapers’ Guild. Seated opposite the projector, I could see student faces punctuate the darkness, arching noses and blunt haircuts carving topography through the reddish glow.

“What do you see?”

Barbara Stafford’s voice had the crackly timbre of a Pablo Casals record and her burnt-orange hair was bi-toned like a Rothko painting. She wore downtown attire, suits far too elegant for campus with collars that added movement and texture to otherwise flat lines. We were in her Art History 101 seminar, an option for University of Chicago undergrads to satisfy a core arts & humanities requirement. Most of us were curious about art but wouldn’t major in art history; some wished they were elsewhere. Barbara knew this.

“A sort of darkness and suspicion,” offered one student.

“Smugness in the projection of power,” added another.

“But those are interpretations! What is it about the men that makes them look suspicious or smug? Start with concrete details. What do you see?”

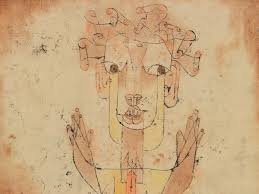

No one spoke. For some reason this was really hard. It didn’t occur to anyone to say something as literal as “I see a group of men, most of whom have long, curly, light-brown hair, in black robes with wide-brimmed tall black hats sitting around a table draped with a red Persian rug in the daytime.” Too obvious, like posing a basic question about a math proof (where someone else inevitably poses the question and the professor inevitably remarks how great a question it is to our curious but proud dismay). We couldn’t see the painting because we were too busy searching for a way of seeing that would show others how smart we were.

“Katie, you’re our resident fashionista. What strikes you about their clothing?”

Adrenaline surged. I felt my face glow in the reddish hue of the projector, watched others’ faces turn to look at mine, felt a mixture of embarrassment at being tokenized as the student who cared most about clothes and appearance and pride that Barbara found something worth noticing, in particular given her own evident attention to style. Clothes weren’t just clothes for me: they were both art and protection. The prospect of wearing the same J.Crew sweater or Seven jeans as another girl had been cruelly beaten out of me in seventh grade, when a queen mean girl snidely asked, in chemistry class, if I knew that she had worn the exact same salmon-colored Gap button-down crew neck cotton sweater, simply in the cream color, the day before. My mom had gotten me the sweater. All moms got their kids Gap sweaters in those days. The insinuation was preposterous but stung like a wasp: henceforth I felt a tinge of awkwardness upon noticing another woman wearing an article of clothing I owned. In those days I wore long ribbons in my ponytails to make my hair seem longer than it was, like extensions. I often wore scarves, having admired the elegance of Spanish women tucking silk scarves under propped collared shirts during my senior year of high school abroad in Burgos, Spain. Material hung everywhere around me. I liked how it moved in the wind and encircled me in the grace I feared I lacked.

“I guess the collars draw your attention. The three guys sitting down have longer collars. They look like bibs. The collar of the guy in the middle is tied tight, barely any space between the folds. A silver locket emerges from underneath. The collars of the two men to his left (and our right) billow more, they’re bunchy, as if those two weren’t so anal retentive when they get dressed in the morning. They also have kinder expressions, especially the guy directly to the left of the one in the center. And then it’s as if the collars of the men standing to the right had too much starch. They’re propped up and overly stiff, caricature stiff. You almost get the feeling Rembrandt added extra air to these puffed up collars to make a statement about the men having their portrait done. Like, someone who had taste and grace wouldn’t have a collar that was so visibly puffy and stiff. Also, the guy in the back doesn’t have a hat like the others.”

Barbara glowed. I’d given her something to work with, a constraint from which to create a world. I felt like I’d just finished a performance, felt the adrenaline subside as students turned their heads back to face the painting again, shifted their attention to the next question, the next comment, the next brush stroke in Syndics of the Drapers’ Guild.

After a few more turns goading students to describe the painting, Barbara stepped out of her role as Socrates and told us about the painting’s historical context. I don’t remember what she said or how she looked when she said it. I don’t remember every class with her. I do remember a homework assignment she gave inspired by André Breton’s objet trouvé, a surrealist technique designed to get outside our standard habits of perception, to let objects we wouldn’t normally see pop into our attention. I wrote about my roommate’s black high-heeled shoes and Barbara could tell I was reading Nietzsche’s Birth of Tragedy because I kept referencing Apollo and Dionysus, godheads for constructive reason and destructive passion, entropy pulling us ever to our demise.[1] I also remember a class where we studied Cindy Sherman photos, in particular her self-portraits as Caravaggio’s Bacchus and her film still from Hitchcock’s Vertigo. We took a trip to the Art Institute of Chicago and looked at a few paintings together. Barbara advised us never to use the handheld audio guides as they would pollute our vision. We had to learn how to trust ourselves and observe the world like scientists.

In the fourth paragraph of the bio on her personal website, Barbara says that “she likes to touch the earth without gloves.” She explains that this means she doesn’t just write about art and how we perceive images, but also “embodies her ideas in exhibitions.”

I interpret the sentence differently. To touch the earth without gloves is to see the details, to pull back the covers of intentionality and watch as if no one were watching. Arts and humanities departments are struggling to stay relevant in an age where we value computer science, mathematics, and engineering. But Barbara didn’t teach us about art. She taught us how to see, taught us how to make room for the phenomenon in front of us. Paintings like Rembrandt’s Syndics of the Drapers’ Guild were a convenient vehicle for training skills that can be transferred and used elsewhere, skills which, I’d argue, are not only relevant but essential to being strong leaders, exacting scientists, and respectful colleagues. No matter what field we work in, we must all work all the time to notice our cognitive biases, the ever-present mind ghosts that distort our vision. We must make room for observation. Encounter others as they are, hear them, remember their words, watch how their emotions speak through the slight curl of their lips and the upturned arch of their eyebrows. Great software needs more than just engineering and science: it needs designers who observe the world to identify features worth building.

I am indebted to Barbara for teaching me how to see. She is integral to the success I’ve had in my career in technology.

Of all the memories I could share about my college experience, why share this one? Why do I remember it so vividly? What makes this memory profound?

I recently read Carlos Castaneda’s The Active Side of Infinity and resonated with the book’s premise as “a collection of memorable events” Castaneda recounts as an exercise to become a warrior-traveler like the shamans who lived in Mexico in ancient times. Don Juan Matus, a (potentially fictional) Yaqui shaman who plays the character of Castaneda’s guru in most of his work, considers the album “an exercise in discipline and impartiality…an act of war.” On his first pass, Castaneda picks out memories he assumes should be important in shaping him as an individual, events like getting accepted to the anthropology program at UCLA or almost marrying a Kay Condor. Don Juan dismisses them as “a pile of nonsense,” noting they are focused on his own emotions rather than being “impersonal affairs” that are nonetheless “extremely personal.”

The first story Castaneda tells that don Juan deems fit for a warrior-traveler is about Madame Ludmilla, “a round, short woman with bleached-blond hair…wearing a red silk robe with feathery, flouncy sleeves and red slippers with furry balls on top” who performs a grotesque strip tease called “figures in front of a mirror.” The visuals remind me of a dream sequence from a Fellini movie, filled with the voluptuousness of wrinkled skin and sagging breasts and the brute force of the carnivalesque. Castaneda’s writing is noticeably better when he starts telling Madame Ludmilla’s story: there’s more detail, more life. We can picture others, smell the putrid stench of dried vomit behind the bar, relive the event with Castaneda and recognize a truth in what he’s lived, not because we’ve had the exact same experience, but because we’ve experienced something similar enough to meet him in the overtones. “What makes [this story] different and memorable,” explains don Juan, “is that it touches every one of us human beings, not just you.”

Don Juan calls this war because it requires discipline to see the world this way. Day in and day out, structures around us bid us to focus our attention on ourselves, to view the world through the prism of self-improvement and self-criticism: What do I want from this encounter? What does he think of me? When I took that action, did she react with admiration or contempt? Is she thinner than I am? Look at her thighs in those pants; if I keep eating desserts the way I do, my thighs will start to look like that too. I’ve fully adopted the growth mindset and am currently working on empathy: in that last encounter, I would only give myself a 4/10 on my empathy scale. But don’t you see that I’m an ESFJ? You have to understand my actions through the prism of my self-revealed personality guide! It’s as if we live in a self-development petri dish, where experiences with others are instruments and experiments to make us better. Everything we live, everyone we meet, and everything we remember gets distorted through a particular analytical prism: we don’t see and love others, we see them through the comparative machine of the prefrontal cortex, comparing, contrasting, categorizing, evaluating them through the prism of how they help or hinder our ability to become the future self we aspire to be.

Warrior-travelers like don Juan fight against this tendency. Collecting an album of memorable events is an exercise in learning how to live differently, to change how we interpret our memories and first-person experiences. As non-warriors, we view memories as scars, events that shape our personality and make us who we are. As warriors, we view ourselves as instruments and vessels to perceive truths worth sharing, where events just so happen to happen to us so we can feel them deeply enough and experience the minute details required to share them vividly with others. Warriors are instruments of the universe, vessels for the universe to come to know itself. We can’t distort what others feel because we want them to like us or act a certain way because of us: we have to see others for who they are, make space for negative and positive emotions. What matters isn’t that we improve or succeed, but that we increase the range of what’s perceivable. Only then can we transmit information with the force required to heal or inspire. Only then are we fearless.

Don Juan’s ways of seeing and being weren’t all new to me (although there were some crazy ideas of viewing people as floating energy balls). There are sprinklings of my quest to live outside the self in many posts on the blog. Rather, The Active Side of Infinity helped me clarify why I share first-person stories in the first place. I don’t write to tell the world about myself or share experiences in an effort to shape my identity. This isn’t catharsis. I write to be a vessel, a warrior-traveler. To share what I felt and saw and smelled and touched as I lived experiences that I didn’t know would be important at the time but that have managed to stick around, like Argos, always coming back, somehow catalyzing feelings of love and gratitude as intense today as they were when I first experienced them. To use my experiences to illustrate things we are all likely to experience in some way or another. To turn memories into stories worth sharing, with details concrete enough that you, reader, can feel them, can relate to them, and understand a truth that, ill-defined and informal though it may be, is searing in its beauty.

This post features two excerpts from my warrior-traveler album, both from my time as an undergraduate at the University of Chicago. I ask myself: if I were speaking to someone for the first time and they asked me to tell them about myself, starting in college, would I share these memories? Likely not. But it’s worthwhile to wonder if doing so might change the world for the good.

When I attended the University of Chicago, very few professors gave students long reading assignments for the first class. Some would share a syllabus, others would circulate a few questions to get us thinking. No one except Loren Kruger expected us to read half of Anna Karenina and be prepared to discuss Tolstoy’s use of literary form to illustrate 19th-century Russian class structures and ideology.

Loren was tall and big-boned. A South African, she once commented on J.M. Coetzee’s startling ability to wield power through silence. She shared his quiet intensity, demanded such rigor and precision in her own work that she couldn’t but demand it from others. The tiredness of the old world laced her eyes, but her work was about resistance; she wrote about Brecht breaking boundaries in theater, art as an iron-hot rod that could shed society’s tired skin and make room for something new. She thought email destroyed intimacy because the virtual distance emboldened students to reach out far more frequently than when they had to brave a face-to-face encounter. About fifteen students attended the first class. By the third class, there were only three of us. With two teaching assistants (a French speaker and a German speaker), the student:teacher ratio became one:one.[2]

Loren intimidated me, too. The culture at the University of Chicago favored critical thinking and debate, so I never worried about whether my comments would offend others or come off as bitchy (at Stanford, sadly, this was often the case). I did worry about whether my ideas made sense. Being the most talkative student in a class of three meant I was constantly exposed in Loren’s class, subjecting myself to feedback and criticism. She criticized openly and copiously, pushing us for precision, depth, insight. It was tough love.

The first thing Loren taught me was the importance of providing concrete examples to test how well I understood a theory. We were reading Karl Marx, either The German Ideology or the first volume of Das Kapital.[3] I confidently answered Loren’s questions about the text, reshuffling Marx’s words or restating what he’d written in my own words. She then asked me to provide a real-world example of one of his theories. I was blank. Had no clue how to answer. I’d grown accustomed to thinking at a level of abstraction, riding text like a surfer rides the top of a wave without grounding the thoughts in particular examples my mind could concretely imagine.[4] The gap humbled me, changed how I test whether I understand something. This happens to be a critical skill in my current work in technology, given how much marketing and business language is high-level and general: teams think they are thinking the same thing, only to realize that with a little more detail they are totally misaligned.

We wrote midterm papers. I don’t remember what I wrote about but do remember opening the email with the grade and her comments, laptop propped on my knees and back resting against the powder-blue wall in my bedroom off the kitchen in the apartment on Woodlawn Avenue. B+. “You are capable of much more than this.” Up rang my old friend impostor syndrome: no, I’m not, what looks like eloquence in class is just a sham, she’s going to realize I’m not what she thinks I am, useless, stupid, I’ll never be able to translate what I can say into writing. I don’t know how. Tucked behind the fiddling furies whispered the faint voice of reason: You do remember that you wrote your paper in a few hours, right? That you were rushing around after the house was robbed for the second time and you had to move?

Before writing our final papers, we had to submit and receive feedback on a formal prospectus rather than just picking a topic. We’d read Frantz Fanon’s The Wretched of the Earth and I worked with Dustin (my personal TA) to craft a prospectus analyzing Gillo Pontecorvo’s Battle of Algiers in light of some of Fanon’s descriptions of the experience of colonialism.[7]

Once again, Loren critiqued it harshly. This time I panicked. I didn’t want to disappoint her again, didn’t want the paper to confirm to both of us that I was useless, incompetent, unable to distill my thinking into clear and cogent writing. The topic was new to me and out of my comfort zone: I wasn’t an expert in négritude or postcolonial critical theory. I wrote her a desperate email suggesting I write about Baudelaire and Adorno instead. I’d written many successful papers about French Romanticism and Symbolism and was on safer ground.

Her response to my anxious plea was one of the more meaningful interactions I’ve ever had with a professor.

Katie, stop thinking about what you’re going to write and just write. You are spending far too much energy worrying about your topic and what you might or might not produce. I am more than confident you are capable of writing something marvelous about the subject you’ve chosen. You’ve demonstrated that to me over the quarter. My critiques of your prospectus were intended to help you refine your thinking, not push you to work on something else. Just work!

I smiled a sigh of relief. No professor had ever said that to me before. Loren had paid attention, noticed symptoms of anxiety but didn’t placate or coddle me. She remained tough because she believed I could improve. Braved the mania. This interaction has had a longer-lasting impact on me than anything I learned about the subject matter in her class. I can call it to mind today, in an entirely different context of activity, to galvanize myself to get started when I’m anxious about a project at work.

The happiest moments writing my final paper about the Battle of Algiers were the moments describing what I saw in the film. I love using words to replay sequences of stills, love interpreting how the placement of objects or people in a still creates an emotional effect. My knack for doing so stems back to what I learned in Art History 101. I think I got an A on the paper. I don’t remember or care. What stays with me is my gratitude to Loren for not letting me give up, and the clear evidence she cared enough about me to put in the work required to help me grow.

[1] This isn’t the first time things I learned in Barbara’s class have made it into my blog. The objet trouvé exercise inspired a former blog post.

[2] I ended up having my own private teaching assistant, a French PhD named Dustin. He told me any self-respecting comparative literature scholar could read and speak both French and German fluently, inspiring me to spend the following year in Germany.

[3] I picked up my copy of The Marx-Engels Reader (MER) to remember what text we read in Loren’s class. I first read other texts in the MER in Classics of Social and Political Thought, a social sciences survey course that I took to fulfill a core requirement (similar to Barbara’s Art History 101) my sophomore year. One thing that leads me to believe we read The German Ideology or volume one of Das Kapital in Loren’s class is the difference in my handwriting between years two and four of college. In year two, my handwriting still had round playfulness to it. The letters are young and joyful, but look like they took a long time to write. I remember noticing that my math professors all seemed to adopt a more compact and efficient font when they wrote proofs on the chalkboard: the a’s were totally sans-serif, loopless. Letters were small. They occupied little space and did what they could not to draw attention to themselves so the thinker could focus on the logic and ideas they represented. I liked those selfless a’s and deliberately changed my handwriting to imitate my math professors. The outcome shows in my MER. I apparently used to like check marks to signal something important: they show up next to straight lines illuminating passages to come back to. A few great notes in the margins are: “Hegelian->Too preoccupied w/ spirit coming to itself at basis…remember we are in (in is circled) world of material” and “Inauthenticity->Displacement of authentic action b/c always work for later (university/alienation w/ me?)”

[4] There has to be a ton of analytic philosophy ink spilled on this question, but it’s interesting to think about what kinds of thinking are advanced by pure formalisms that would be hampered by ties to concrete, imaginable referents and what kinds of thinking degrade into senseless mumbo jumbo without ties to concrete, imaginable referents. Marketing language and politically correct platitudes definitely fall into category two. One contemporary symptom of not knowing what one’s talking about is the abuse of the demonstrative adjective that. Interestingly enough, such demonstrative abusers never talk about thises, they only talk about thats. This may be used emphatically and demonstratively in a Twitter or Facebook conversation: when someone wholeheartedly supports a comment, critique, or example of some point, they’ll write This as a stand-alone sentence with super-demonstrative reference power, power strong enough to encompass the entire statement made before it. That’s actually ok. It’s referring to one thing, the thing stated just above it. It’s dramatic but points to something the listener/reader can also point to. The problem with the abused that is that it starts to refer to a general class of things that are assumed, in the context of the conversation, to have some mutually understood functional value: “To successfully negotiate the meeting, you have to have that presentation.” “Have that conversation: it’s the only way to support your D&I efforts!” Here, the listener cannot imagine any particular that that these words denote. The speaker is pointing to a class of objects she assumes the listener is also familiar with and agrees exist. A conversation about what? A presentation that looks like what? There are so many different kinds and qualities of conversations or presentations that could fit the bill. I hear this used all the time and cringe a little inside every time.
I’m curious to know if others have the same reaction I do, or if I should update my grammar police to accept what has become common usage. Leibniz, on the other hand, was a staunch early modern defender of cogitatio caeca (Latin for blind thought), which referred to our ability to calculate and manipulate formal symbols and create truthful statements without requiring the halting step of imagining the concrete objects these symbols refer to. This, he argued against conservatives like Thomas Hobbes, was crucial to advancing mathematics. There are structural similarities in the current debates about explainability of machine learning algorithms, even though that which is imagined or understood may lie on a different epistemological, ontological, and logical plane.

[5] People tell me that one reason they like my talks about machine learning is that I use a lot of examples to help them understand abstract concepts. Many talks are structured like this one, where I walk an audience through the decisions they would have to make as a cross-functional team collaborating on a machine learning application. The example comes from a project former colleagues worked on. I realized over the last couple of years that no matter how much I like public speaking, I am horrified by the prospect of specializing in speaking or thought leadership and not being actively engaged in the nitty-gritty, day-to-day work of building systems and observing first-person how people interact with them. I believe the existential horror stems from my deep-seated beliefs about language and communication, and in particular from my discomfort with words that don’t refer to anything. Diving into this would be worthwhile: there’s a big difference between the fictional imagination, the ability to bring to life the concrete particularity of something or someone that doesn’t exist, and the vagueness of generalities lacking reference. The second does harm and breeds stereotypes. The first is not only potent in the realm of fiction, but, as my fiancé Mihnea is helping me understand, may well be one of the master skills of the entrepreneur and executive. Getting people aligned and galvanized around a vision can only occur if that vision is concrete, compelling, and believable. An imaginable state of the world we can all inhabit, even if it doesn’t exist yet. A tractable as if that has the power to influence what we do and how we behave today so as to encourage its creation and possibility.[6]

[6] I believe this is the first time I’ve had a footnote referring to another footnote (I did play around with writing an incorrigibly long photo caption in Analogue Repeaters). Funny that this ties to the footnote just above (hello there, dear footnote!) and even funnier that footnote 4 is about demonstrative reference, including the this discursive reference. But it’s seriously another thought so I felt it merited its own footnote as opposed to being the second half of footnote 5. When I sat down to write this post, I originally planned to write about the curious and incredible potency of imagined future states as tools to direct action in the present. I’ve been thinking about this conceptual structure for a long time, having written about it in the context of seventeenth-century French philosophy, math, and literature in my dissertation. The structure has been around since the Greeks (Aristotle references it in Book III of the Nicomachean Ethics) and is used in startup culture today. I started writing a post on the topic in August 2018. Here’s the text I found in the incomplete draft when I reopened it a few days ago:

A goal is a thinking tool.

A good goal motivates through structured rewards. It keeps people focused on an outcome, helps them prioritize actions and say no to things, and stretches them to work harder than they would otherwise. Wise people say that a good goal should be about 80% achievable. Wise leaders make time to reward and recognize inputs and outputs.

A great goal reframes what’s possible. It is a moonshot and requires the suspension of disbelief, the willingness to quiet all the we can’ts and believe something surreal, to sacrifice realism and make room for excellence. It assumes a future outcome that is so outlandish, so bold, that when you work backwards through the series of steps required to achieve it, you start to do great things you wouldn’t have done otherwise. Fools say that it doesn’t matter if you never come close to realizing a great goal, because the very act of supposing it could be possible and reorienting your compass has already resulted in concrete progress towards a slightly more reasonable but still way above average outcome.

Good goals create outcomes. Great goals create legacies.

This text alienates me. It reminds me of an inspirational business book: the syncopation and pace seem geared to stir pathos and excitement. How curious that the self evolves so quickly, that the I looking back on the same I’s creations of a few months ago feels like she is observing a stranger, someone speaking a different language and inhabiting a different world. But of course that’s the case. Of course being in a different environment shapes how one thinks and what one sees. And the lesson here is not one of fear around instability of character: it’s one that underlines the crucial importance of context, the crucial importance of taking care to select our surroundings so we fill our brains with thoughts and words that shape a world we find beautiful, a world we can call home. The other point of this footnote is a comment on the creative process. Readers may have noted the quotation from Pascal that accompanies all my posts: “The last thing one settles in writing a book is what one should put in first.” The joy of writing, for me, as for Mihnea and Kevin Kelly and many others, lies in unpacking an intuition, sitting down in front of a silent wall and a silent world to try to better understand something. I’m happiest when, writing fast, bad, and wrong to give my thoughts space to unfurl, I discover something I wouldn’t have discovered had I not written. Writing creates these thoughts. It’s possible they lie dormant with potential inside the dense snarl of an intuition and possible they wouldn’t have existed otherwise. Topic for another post. With this post, I originally intended to use the anecdote about Stafford’s class to show the importance of using concrete details, to illustrate how training in art history may actually be great training for the tasks of a leader and CEO.
But as my mind circled around the structure that would make this kind of intro make sense, I was called to write about Castaneda, pulled there by my emotions and how meaningful these memories of Barbara and Loren felt. I changed the topic. Followed the path my emotions carved for me. The process was painful and anxiety-inducing. But it also felt like the kind of struggle I wanted to undertake and live through in the service of writing something worth reading, the purpose of my blog.

[7] About six months ago, I learned that an Algerian taxi driver in Montréal was the nephew of Ali La Pointe, the revolutionary martyr hero in Battle of Algiers. It’s possible he was lying, but he was delighted by the fact that I’d seen and loved the film and told me about the heroic deeds of another uncle who didn’t have the same iconic stardom as Ali. Later that evening I attended a dinner hosted by Element AI and couldn’t help but tell Yoshua Bengio about the incredible conversation I had in the taxi cab. He looked at me with confusion and discomfort, thrown somewhat off balance by my disregard for the customary rules of conversation with acquaintances.

The featured image is the Syndics of the Drapers’ Guild, which Rembrandt painted in 1662. The assembled drapers assess the quality of different weaves and cloths, presumably, here, assessing the quality of the red rug splayed over the table. In Ways of Seeing, John Berger writes about how oil paintings signified social status in the early modern period. Having your portrait done showed you’d made it, the way driving a Porsche around town would do so today. When I mentioned that the collars seemed a little out of place, Barbara Stafford found the detail relevant precisely because of the plausibility that Rembrandt was including hints of disdain and critique in the commissioned portraits, mocking both his subjects and his dependence on them to get by.