That familiar discomfort of wanting to write but not feeling ready yet.*

(The default voice pops up in my brain: “Then don’t write! Be kind to yourself! Keep reading until you understand things fully enough to write something cogent and coherent, something worth reading.”

The second voice: “But you committed to doing this! To not write** is to fail.***”

The third voice: “Well gosh, I do find it a bit puerile to incorporate meta-thoughts on the process of writing so frequently in my posts, but laziness triumphs, and voilà there they come. Welcome back. Let’s turn it to our advantage one more time.”)

This time the courage to just do it came from the realization that “I don’t understand this yet” is interesting in itself. We all navigate the world with different degrees of knowledge about different topics. To follow Wilfrid Sellars, most of the time we inhabit the manifest image, “the framework in terms of which man came to be aware of himself as man-in-the-world,” or, more broadly, the framework in terms of which we ordinarily observe and explain our world. We need the manifest image to get by, to engage with one another and not to live in a state of utter paralysis, questioning our every thought or experience as if we were being tricked by the evil genius Descartes introduces at the outset of his Meditations (the evil genius toppled by the clear and distinct force of the cogito, the I am, which, per Dan Dennett, actually had the reverse effect of fooling us into believing our consciousness is something different from what it actually is). Sellars contrasts the manifest image with the scientific image: “the scientific image presents itself as a rival image. From its point of view the manifest image on which it rests is an ‘inadequate’ but pragmatically useful likeness of a reality which first finds its adequate (in principle) likeness in the scientific image.” So we all live in this not quite reality, our ability to cooperate and coexist predicated pragmatically upon our shared not-quite-accurate truths. It’s a damn good thing the mess works so well, or we’d never get anything done.

Sellars has a lot to say about the relationship between the manifest and scientific images, how and where the two merge and diverge. In the rest of this post, I’m going to catalogue my gradual coming to not-yet-fully understanding the relationship between mathematical machine learning models and the hardware they run on. It’s spurring my curiosity, but I certainly don’t understand it yet. I would welcome readers’ input on what to read and to whom to talk to change my manifest image into one that’s slightly more scientific.

So, one common thing we hear these days (in particular given Nvidia’s now formidable marketing presence) is that graphics processing units (GPUs) and tensor processing units (TPUs) are a key hardware advance driving the current ubiquity of artificial intelligence (AI). I learned about GPUs for the first time about two years ago and wanted to understand why they made it so much faster to train deep neural networks, the algorithms behind many popular AI applications. I settled on an understanding that the linear algebra powering these applications (operations we perform on vectors, strings of numbers oriented in a direction in an n-dimensional space) is better executed on hardware of a parallel, matrix-like structure. That is to say, properties of the hardware were more like properties of the math: GPUs performed so much more quickly than a linear central processing unit (CPU) because they didn’t have to squeeze a parallel computation into the straitjacket of a linear, gated flow of electrons. Tensors, objects that describe the relationships between vectors, as in Google’s hardware, are that much more closely aligned with the mathematical operations behind deep learning algorithms.

There are two levels of knowledge there:

- Basic sales pitch: “remember, GPU = deep learning hardware; they make AI faster, and therefore make AI easier to use so more possible!”

- Just above the basic sales pitch: “the mathematics behind deep learning is better represented by GPU or TPU hardware; that’s why they make AI faster, and therefore easier to use so more possible!”
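A minimal sketch of what the second-level claim amounts to, written in plain Python rather than anything resembling real GPU code. The function names (`dot`, `matvec_sequential`, `matvec_parallel`) and the tiny `weights` matrix are hypothetical, chosen only for illustration. The point it tries to show is that each entry of a matrix-vector product is an independent dot product, so nothing forces the rows to be computed one after another.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(r * v for r, v in zip(row, vec))


def matvec_sequential(matrix, vec):
    """Compute the output entries one after another, in a single stream."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Hand each row to a worker; no row needs another row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so outputs line up with rows.
        return list(pool.map(lambda row: dot(row, vec), matrix))


# Hypothetical toy data: a 3x3 "weight matrix" and an input vector.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
inputs = [1, 0, -1]

print(matvec_sequential(weights, inputs))
print(matvec_parallel(weights, inputs))
```

Python threads will not actually make this faster; the sketch only illustrates the independence of the row computations, which is the property that hardware with many simple parallel units can exploit.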

At this first stage of knowledge, my mind reached a plateau where I assumed that the tensor structure was somehow intrinsically and essentially linked to the math in deep learning. My brain’s neurons and synapses had coalesced on some local minimum or maximum where the two concepts were linked and reinforced by talks I gave (which by design condense understanding into some quotable meme, in particular in the age of Twitter…and this requirement to condense certainly reinforces and reshapes how something is understood).

In time, I started to explore the strange world of quantum computing, starting afresh off the local plateau to try, again, to understand new claims that entangled qubits enable even faster execution of the math behind deep learning than the soddenly deterministic bits of CPUs, GPUs, and TPUs. As Ivan Deutsch explains in this article, the promise behind quantum computing is as follows:

In a classical computer, information is stored in retrievable bits binary coded as 0 or 1. But in a quantum computer, elementary particles inhabit a probabilistic limbo called superposition where a “qubit” can be coded as 0 and 1.

Here is the magic: Each qubit can be entangled with the other qubits in the machine. The intertwining of quantum “states” exponentially increases the number of 0s and 1s that can be simultaneously processed by an array of qubits. Machines that can harness the power of quantum logic can deal with exponentially greater levels of complexity than the most powerful classical computer. Problems that would take a state-of-the-art classical computer the age of our universe to solve, can, in theory, be solved by a universal quantum computer in hours.
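A rough way to see where the "exponentially" in that passage comes from is to count what a classical machine would have to store to describe the qubits. The sketch below is ordinary classical bookkeeping, not a quantum computation, and the function names (`uniform_superposition`, `measurement_probabilities`) are hypothetical. It assumes the simplest case, where every one of the n qubits is in an equal superposition of 0 and 1, so the joint state is a list of 2^n equal amplitudes.

```python
import math


def uniform_superposition(n_qubits):
    """Amplitudes for n qubits, each in an equal superposition of 0 and 1.

    A classical description needs one amplitude per bit string,
    i.e. 2**n_qubits numbers in total.
    """
    size = 2 ** n_qubits
    amplitude = 1 / math.sqrt(size)
    return [amplitude] * size


def measurement_probabilities(state):
    """Probability of observing each bit string: squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


for n in (1, 2, 3, 10, 20):
    state = uniform_superposition(n)
    total = sum(measurement_probabilities(state))
    print(f"{n} qubits -> {len(state)} amplitudes, probabilities sum to {total:.6f}")
```

Each added qubit doubles the length of the list, which is why simulating even a modest number of qubits on classical hardware becomes impractical.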

What’s salient here is that the inherent probabilism of quantum computers makes them even more fundamentally aligned with the true mathematics we’re representing with machine learning algorithms. TPUs, then, seem to exhibit a structure that best captures the mathematical operations of the algorithms, but exhibit the fatal flaw of being deterministic by essence: they’re still trafficking in the binary digits of 1s and 0s, even if they’re allocated in a different way. Quantum computing seems to bring back an analog computing paradigm, where we use aspects of physical phenomena to model the problem we’d like to solve. Quantum, of course, exhibits this special fragility where, should the balance of the system be disrupted, the probabilistic potential reverts down to the boring old determinism of 1s and 0s: a cat observed will be either dead or alive, per the harsh law of the excluded middle that haunts our manifest image.

What, then, is the status of being of the math? I feel a risk of falling into Platonism, of assuming that a statement like “3 is prime” refers to some abstract entity, the number 3, that then gets realized in a lesser form as it is embodied on a CPU, GPU, or cup of coffee. It feels more cogent to me to endorse mathematical fictionalism, where mathematical statements like “3 is prime” tell a different type of truth than truths we tell about objects and people we can touch and love in our manifest world.****

My conclusion, then, is that radical creativity in machine learning (in any technology) may arise from our being able to abstract the formal mathematics from its substrate, to conceptually open up a liminal space where properties of equations have yet to take form. This is likely a lesson for our own identities, the freeing from necessity, from assumption, that enables us to come into the self we never thought we’d be.

I have a long way to go to understand this fully, and I’ll never understand it fully enough to contribute to the future of hardware R&D. But the world needs communicators, translators who eventually accept that close enough can be a place for empathy, and growth.

*This holds not only for writing, but for many types of doing, including creating a product. Agile methodologies help overcome the paralysis of uncertainty, the discomfort of not being ready yet. You commit to doing something, see how it works, see how people respond, see what you can do better next time. We’re always navigating various degrees of uncertainty, as Rich Sutton discussed on the In Context podcast. Sutton’s formalization of doing the best you can with the information you have available today towards some long-term goal, but learning at each step rather than waiting for the long-term result, is called temporal-difference learning.

**Split infinitive intentional.

***Who’s keeping score?

****That’s not to say we can’t love numbers, as Euler’s Identity inspires enormous joy in me, or that we can’t love fictional characters, or that we can’t love misrepresentations of real people that we fabricate in our imaginations. I’ve fallen obsessively in love with 3 or 4 imaginary men this year, creations of my imagination loosely inspired by the real people I thought I loved.

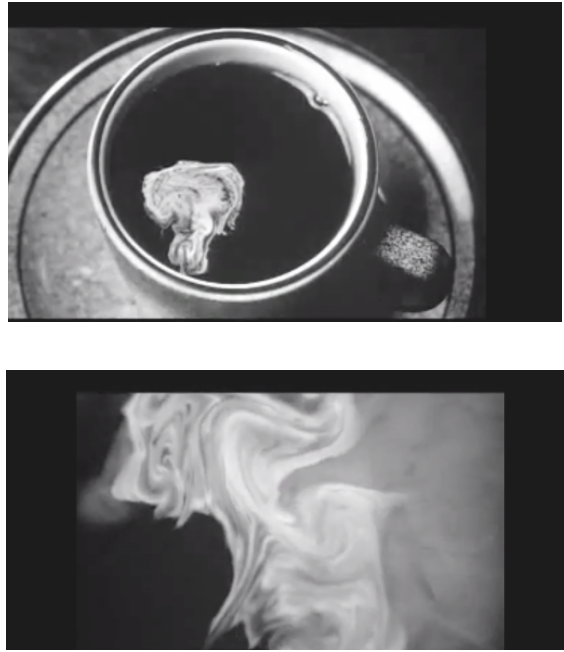

The image comes from this site, which analyzes themes in films by Darren Aronofsky. Maximilian Cohen, the protagonist of Pi, sees mathematical patterns all over the place, which eventually drives him to put a drill into his head. Aronofsky has a penchant for angst. Others, like Richard Feynman, find delight in exploring mathematical regularities in the world around us. Soap bubbles, for example, offer incredible complexity, if we’re curious enough to look.