There was another life that I might have had, but I am having this one. - Kazuo Ishiguro

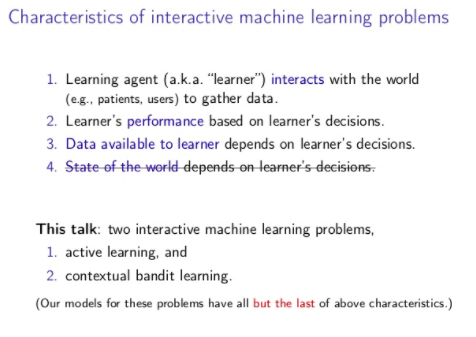

On April 18, 2016*, I attended an NYAI Meetup** featuring a talk by Columbia Computer Science Professor Dan Hsu on interactive learning. Incredibly clear and informative, the talk slides are worth reviewing in their entirety. But one in particular caught my attention (fortunately it summarizes many of the subsequent examples):

It’s worth stepping back to understand why this is interesting.

Much of the recent headline-grabbing progress in artificial intelligence (AI) comes from the field of supervised learning. As I explained in a recent HBR article, I find it helpful to think of supervised learning like the inverse of high school algebra:

Think back to high school math — I promise this will be brief — when you first learned the equation for a straight line: y = mx + b. Algebraic equations like this represent the relationship between two variables, x and y. In high school algebra, you’d be told what m and b are, be given an input value for x, and then be asked to plug them into the equation to solve for y. In this case, you start with the equation and then calculate particular values.

Supervised learning reverses this process, solving for m and b, given a set of x’s and y’s. In supervised learning, you start with many particulars — the data — and infer the general equation. And the learning part means you can update the equation as you see more x’s and y’s, changing the slope of the line to better fit the data. The equation almost never identifies the relationship between each x and y with 100% accuracy, but the generalization is powerful because later on you can use it to do algebra on new data. Once you’ve found a slope that captures a relationship between x and y reliably, if you are given a new x value, you can make an educated guess about the corresponding value of y.

Supervised learning works well for classification problems (spam or not spam? relevant or not for my lawsuit? cat or dog?) because of how the functions generalize. Effectively, the “training labels” humans provide in supervised learning assign categories, tokens we affiliate to abstractions from the glorious particularities of the world that enable us to perceive two things to be similar. Because our language is relatively stable (stable does not mean normative, as Canadian Inuit perceive snow differently from New Yorkers because they have more categories to work with), generalities and abstractions are useful, enabling the learned system to act correctly in situations not present in the training set (e.g., it takes a hell of a long time for golden retrievers to evolve to be indistinguishable from their great-great-great-great-great-grandfathers, so knowing what one looks like on April 18, 2016 will be a good predictor of what one looks like on December 2, 2017). But, as Rich Sutton*** and Andrew Barto eloquently point out in their textbook on reinforcement learning,

This is an important kind of learning, but alone it is not adequate for learning from interaction. In interactive problems it is often impractical to obtain examples of desired behavior that are both correct and representative of all the situations in which the agent has to act. In uncharted territory—where one would expect learning to be most beneficial—an agent must be able to learn from its own experience.

In his NYAI talk, Dan Hsu also mentioned a common practical limitation of supervised learning, namely that many companies often lack good labeled training data and it can be expensive, even in the age of Mechanical Turk, to take the time to provide labels.**** The core thing to recognize is that learning from generalization requires that future situations look like past situations; learning from interaction with the environment helps develop a policy for action that can be applied even when future situations do not look exactly like past situations. The maxim “if you don’t have anything nice to say, don’t say anything at all” holds both in a situation where you want to gossip about a colleague and in a situation where you want to criticize a crappy waiter at a restaurant.

In a supervised learning paradigm, there are certainly traps to make faulty generalizations from the available training data. One classic problem is called “overfitting”, where a model seems to do a great job on a training data set but fails to generalize well to new data. But the super critical salient difference Hsu points out in his talk is that, while with supervised learning the data available to the learner is exogenous to the system, with interactive machine learning approaches, the learner’s performance is based on the learner’s decisions and the data available to the world depends on the learner’s decisions.

Think about that. Think about what that means for gauging the consequences of decisions. Effectively, these learners cannot evaluate counterfactuals: they cannot use data or evidence to judge what would have happened if they took a different action. An ideal optimization scenario, by contrast, would be one where we could observe the possible outcomes of any and all potential decisions, and select the action with the best outcome across all these potential scenarios (this is closer, but not identical, to the spirit of variational inference, but that is a complex topic for another post).

To share one of Hsu’s***** concrete examples, let’s say a website operator has a goal to personalize website content to entice a consumer to buy a pair of shoes. Before the user shows up at the site, our operator has some information about her profile and browsing history, so can use past actions to guess what might be interesting bait to get a click (and eventually a purchase). So, at the moment of truth, the operator says “Let’s show the beige Cole Hann high heels!”, displays the content, and observes the reaction. We’ll give the operator the benefit of the doubt and assume the user clicks, or even goes on to purchase. Score! Positive signal! Do that again in the future! But was it really the best choice? What would have happened if the operator had shown the manipulatable consumer the red Jimmy Choo high heels, which cost $750 per pair rather than a more modest $200 per pair? Would the manipulatable consumer have clicked? Was this really the best action?

The learner will never know. It can only observe the outcome of the action it took, not the action it didn’t take.

The literature refers to this dilemma as the trade-off between exploration and exploitation. To again cite Sutton and Barto:

One of the challenges that arise in reinforcement learning, and not in other kinds of learning, is the trade-off between exploration and exploitation. To obtain a lot of reward, a reinforcement learning agent must prefer actions that it has tried in the past and found to be effective in producing reward. But to discover such actions, it has to try actions that it has not selected before. The agent has to exploit what it already knows in order to obtain reward, but it also has to explore in order to make better action selections in the future. The dilemma is that neither exploration nor exploitation can be pursued exclusively without failing at the task. The agent must try a variety of actions and progressively favor those that appear to be best. On a stochastic task, each action must be tried many times to gain a reliable estimate of its expected reward.

There’s a lot to say about the exploration-exploitation tradeoff in machine learning (I recommend starting with the Sutton/Barto textbook). Now that I’ve introduced the concept, I’d like to pivot to consider where and why this is relevant in honest-to-goodness-real-life.

The nice thing about being an interactive machine learning algorithm as opposed to a human is that algorithms are executors, not designers or managers. They’re given a task (“optimize revenues for our shoe store!”) and get to try stuff and make mistakes and learn from feedback, but never have to go through the soul-searching agony of deciding what goal is worth achieving. Human designer overlords take care of that for them. And even the domain and range of possible data to learn from is constrained by technical conditions: designers make sure that it’s not all the data out there in the world that’s used to optimize performance on some task, but a tiny little baby subset (even if that tiny little baby entails 500 million examples) confined within a sphere of relevance.

Being a human is unfathomably more complicated.

Many choices we make benefit from the luxury of triviality and frequency. “Where should we go for dinner and what should we eat when we get there?” Exploitation can be a safe choice, in particular for creatures of habit. “Well, sweetgreen is around the corner, it’s fast and reliable. We could take the time to review other restaurants (which could lead to the most amazing culinary experience of our entire lives!) or we could not bother to make the effort, stick with what we know, and guarantee a good meal with our standard kale caesar salad, that parmesan crisp thing they put on the salad is really quite tasty…” It’s not a big deal if we make the wrong choice because, low and behold, tomorrow is another day with another dinner! And if we explore something new, it’s possible the food will be just terrible and sometimes we’re really not up for the risk, or worse, the discomfort or shame of having to send something we don’t like back. And sometimes it’s fine to take the risk and we come to learn we really do love sweetbreads, not sweetgreens, and perhaps our whole diet shifts to some decadent 19th-century French paleo practice in the style of des Esseintes.

Other choices have higher stakes (or at least feel like they do) and easily lead to paralysis in the face of uncertainty. Working at a startup strengthens this muscle every day. Early on, founders are plagued by an unknown amount of unknown unknowns. We’d love to have a magic crystal ball that enables us to consider the future outcomes of a range of possible decisions, and always act in the way that guarantees future success. But the crystal balls don’t exist, and even if they did, we sometimes have so few prior assumptions to prime the pump that the crystal ball could only output an #ERROR message to indicate there’s just not enough there to forecast. As such, the only option available is to act and to learn from the data provided as a result of that action. To jumpstart empiricism, staking some claim and getting as comfortable as possible with the knowledge that the counterfactual will never be explored, and that each action taken shifts the playing field of possibility and probability and certainty slightly, calming minds and hearts. The core challenge startup leaders face is to enable the team to execute as if these conditions of uncertainty weren’t present, to provide a safe space for execution under the umbrella of risk and experiment. What’s fortunate, however, is that the goals of the enterprise are, if not entirely well-defined, at least circumscribed. Businesses exist to turn profits and that serves as a useful, if not always moral, constraint.

Big personal life decisions exhibit further variability because we but rarely know what to optimize for, and it can be incredibly counter-productive and harmful to either constrain ourselves too early or suffer from the psychological malaise of assuming there’s something wrong with us if we don’t have some master five-year plan.

This human condition is strange because we do need to set goals-it’s beneficial for us to consider second- and third-tier consequences, i.e., if our goal is to be healthy and fit, we should overcome the first-tier consequence of receiving pleasure when we drown our sorrows in a gallon of salted caramel ice cream-and yet it’s simply impossible for us to imagine the future accurately because, well, we overfit to our present and our past.

I’ll give a concrete example from my own experience. As I touched upon in a recent post about transitioning from academia to business, one reason why it’s so difficult to make a career change is that, while we never actually predict the future accurately, it’s easier to fear loss from a known predicament than to imagine gain from a foreign predicament.****** Concretely, when I was deciding whether to pursue a career in academia or the private sector in the fifth year in graduate school, I erroneously assumed that I was making a strict binary choice, that going into business meant forsaking a career teaching or publishing. As I was evaluating my decision, I never in my wildest dreams imagined that, a mere two years later, I would be invited to be an adjunct professor at the University of Calgary Faculty of Law, teaching about how new technologies were impacting traditional professional ethics. And I also never imagined that, as I gave more and more talks, I would subsequently be invited to deliver guest lectures at numerous business schools in North America. This path is not necessarily the right path for everyone, but it was and is the right path for me. In retrospect, I wish I’d constructed my decision differently, shifting my energy from fearing an unknown and unknowable future to paying attention to what energized me and made me happy and working to maximize the likelihood of such energizing moments occurring in my life. I still struggle to live this way, still fetishize what I think I should be wanting to do and living with an undercurrent of anxiety that a choice, a foreclosure of possibility, may send me down an irreconcilably wrong path. It’s a shitty way to be, and something I’m actively working to overcome.

So what should our policy be? How can we reconcile this terrific trade-off between exploration and exploitation, between exposing ourselves to something radically new and honing a given skill, between learning from a stranger and spending more time with a loved one, between opening our mind to some new field and developing niche knowledge in a given domain, between jumping to a new company with new people and problems, and exercising our resilience and loyalty to a given team?

There is no right answer. We’re all wired differently. We all respond to challenges differently. We’re all motivated by different things.

Perhaps death is the best constraint we have to provide some guidance, some policy to choose between choice A and choice B. For we can project ourselves forward to our imagined death bed, where we lie, alone, staring into the silent mirror of our hearts, and ask ourselves “Was my life was meaningful?” But this imagined scene is not actually a future state: it is a present policy. It is a principle we can use to evaluate decisions, a principle that is useful because it abstracts us from the mire of emotions overly indexed towards near-term goals and provides us with perspective.

And what’s perhaps most miraculous is that, at every present, we can sit there are stare into the silent mirror of our hearts and look back on the choices we’ve made and say, “That is me.” It’s so hard going forward, and so easy going backward. The proportion of what may come wanes ever smaller than the portion of what has been, never quite converging until it’s too late, and we are complete.

*Thank you, internet, for enabling me to recall the date with such exacting precision! Using my memory, I would have deduced the approximate date by 1) remembering that Robert Colpitts, my boyfriend at the time (Godspeed to him today, as he participates in a sit-a-thon fundraiser for the Interdependence Project in New York City, a worthy cause), attended with me, recalling how fresh our relationship was (it had to have been really fresh because the frequency with which we attended professional events together subsequently declined), and working backwards from the start to find the date; 2) remembering what I wore! (crazy!!), namely a sheer pink sleeveless shirt, a pair of wide-legged white pants that landed just slightly above the ankle and therefore looked great with the pair of beige, heeled sandals with leather so stiff it gave me horrific blisters that made running less than pleasant for the rest of the week. So I’d recently purchased those when my brother and his girlfriend visited, which was in late February (or early March?) 2016; 3) remembering that afterwards we went to some fast food Indian joint nearby in the Flatiron district, food was decent but not good enough to inspire me to return. So that would put is in the March-April, 2016 range, which is close but not the exact April 18. That’s one week after my birthday (April 11); I remember Robert and I had a wonderful celebration on my birthday. I felt more deeply cared for than I had in any past birthdays. But I don’t remember this talk relative to the birthday celebration (I do remember sending the marketing email to announce the Fast Forward Labs report on text summarization on my birthday, when I worked for half day and then met Robert at the nearby sweetgreen, where he ordered, as always, (Robert is a creature of exploitation) the kale caesar salad, after which we walked together across the Brooklyn Bridge to my house, we loved walking together, we took many, many walks together, often at night after work at the Promenade, often in the morning, before work, at the Promenade, when there were so few people around, so few people awake). I must say, I find the process of reconstructing when an event took place using temporal landmarks much more rewarding than searching for “Dan Hsu Interactive Learning NYAI” on Google to find the exact date. But the search terms themselves reveal something equally interesting about our heuristic mnemonics, as every time we reconstruct some theme or topic to retrieve a former conversation on Slack.

**Crazy that WeWork recently bought Meetup, although interesting to think about how the two business models enable what I am slowly coming to see as the most important creative force in the universe, the combinatory potential of minds meeting productively, where productively means that each mind is not coming as a blank slate but as engaged in a project, an endeavor, where these endeavors can productively overlap and, guided by a Smithian invisible hand, create something new. The most interesting model we hope to work on soon at integrate.ai is one that optimizes groups in a multiplayer game experience (which we lovingly call the polyamorous online dating algorithm), so mapping personality and playing style affinities to dynamically allocate the best next player to an alliance. Social compatibility is a fascinating thing to optimize for, in particular when it goes beyond just assembling a pleasant cocktail party to pairing minds, skills, and temperaments to optimize the likelihood of creating something beautiful and new.

***Sutton has one of the most beautiful minds in the field and he is kind. He is a person to celebrate. I am grateful our paths have crossed and thoroughly enjoyed our conversation on the In Context podcast.

***Maura Grossman and Gordon Cormack have written countless articles about the benefits of using active learning for technology assisted review (TAR), or classifying documents for their relevance for a lawsuit. The tradeoffs they weigh relate to system performance (gauged by precision and recall on a document set) versus time, cost, and effort to achieve that performance.

*****Hsu did not mention Haan or Choo. I added some more color.

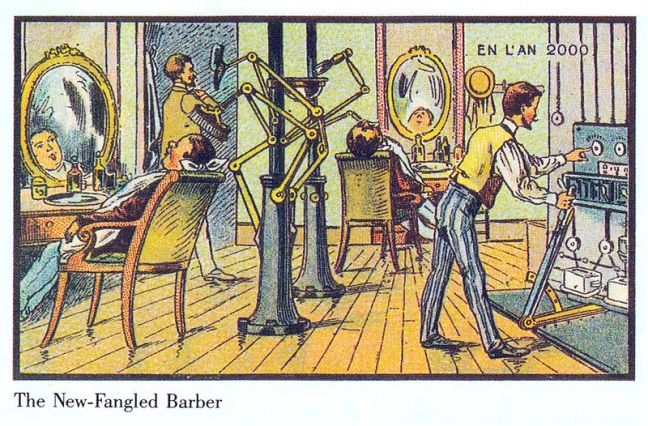

******Note this same dynamic occurs in our current fears about the future economy. We worry a hell of a lot more about the losses we will incur if artificial intelligence systems automate existing jobs than we celebrate the possibilities of new jobs and work that might become possible once these systems are in place. This is also due to the fact that the future we imagine tends to be an adaptation of what we know today, as delightfully illustrated in Jean-Marc Côté’s anachronistic cartoons of the year 2000. The cartoons show what happens when our imagination only changes one variable as opposed to a set of holistically interconnected variables.

The featured image is a photograph I took of the sidewalk on State Street between Court and Clinton Streets in Brooklyn Heights. I presume a bird walked on wet concrete. Is that how those kinds of footprints are created? I may see those footprints again in the future, but not nearly as soon as I’d be able to were I not to have decided to move to Toronto in May. Now that I’ve thought about them, I may intentionally make the trip to Brooklyn next time I’m in New York (certainly before January 11, unless I die between now and then). I’ll have to seek out similar footprints in Toronto, or perhaps the snows of Alberta.

Wow! As always a romp through philosophical and technological issues I did not know existed. Thanks for blowing my mind and expanding my vocabulary. I love your description of the startup mindset. It makes you really admire the courage of those who dare Step onto that stage, a few of whom I know. Also I could not help but think of the struggles of children with autism and the especially strong preference for the familiar, the tried and true, and how, following your analysis, that preference for exploitation of known quantities can upset the balance of exploitation vs exploration. Very interesting!

LikeLiked by 1 person