One of the main arguments the Israeli historian Yuval Noah Harari makes in Sapiens: A Brief History of Humankind is that mankind differs from other species because we can cooperate flexibly in large numbers, united in cause and spirit not by anything real, but by the fictions of our collective imagination. Examples of these fictions include gods, nations, money, and human rights, which are supported by religions, political structures, trade networks, and legal institutions, respectively.* **

As an entrepreneur, I’m increasingly appreciative of and fascinated by the power of collective fictions. Building a technology company is hard. Like, incredibly hard. Lost deals, fragile egos, impulsive choices, bugs in the code, missed deadlines, frantic sprints to deliver on customer requests, the doldrums of execution: any number of things can temper the initial excitement of starting a new venture. Mission is another fiction required to keep a team united and driven when the proverbial shit hits the fan. While a strong, charismatic group of leaders is key to establishing and sustaining a company mission, companies don’t exist in a vacuum: they exist in a market, and participate in the larger collective fictions of the Zeitgeist in which they operate. The borders are fluid and porous, and leadership can use this porousness to energize a team to feel like they’re on the right track, like they’re fighting the right battle at the right time.

These days, for example, it is incredibly energizing to work for a company building software products with artificial intelligence (AI). At its essence, AI is shorthand for products that use data to provide a service or insight to a user (or, as I argued in a previous post, AI is whatever computers cannot do until they can). But there wouldn’t be so much frenzied fervor around AI if it were as boring as building a product using statistics and data. Rather, what’s exciting the public are the collective fictions we’re building around what AI means (or could mean, or should mean) for society. It all becomes a lot more exciting when we think about AI as computers doing things we’ve always thought only humans can do: when they start to speak, write, or even create art like we do, and when we no longer have to adulterate and contort our thoughts and language to speak Google or speak Excel, going from the messy fluidity of communication to the terse discreteness of structured SQL.

The problem is that some of our collective fictions about AI, exciting though they may be, are distracting us from the real problems AI can and should be used to solve, as well as from some of the real problems AI is creating (and will only exacerbate) if we’re not careful. In this post, I cover my top five distractions in contemporary public discourse around AI. I’m sure there are many more, and I welcome you to add to this list!

Distraction 1: The End of Work

Anytime he hears rumblings that AI is going to replace the workforce as we know it, my father, who has 40 years of experience in software engineering, most recently in natural language processing and machine learning, placidly mentions Desk Set, a 1957 romantic comedy featuring the always lovable Spencer Tracy and Katharine Hepburn. Desk Set features a group of librarians at a national broadcasting network who fear for their job security when an engineer is brought in to install EMERAC (a play on ENIAC), an “electronic brain” that promises to do a better job fielding consumer trivia questions than they do. The film is both charming and prescient, and will seem very familiar to anyone reading about a world without work. The best scene features a virtuoso feat of associative memory showing the sheer brilliance of the character played by Katharine Hepburn (winning Tracy’s heart in the process), a brilliance the primitive electronic brain would have no chance of emulating. The movie ends with a literal deus ex machina: a machine accidentally prints pink slips firing the entire company, only to be shut down for disrupting operations.

The Desk Set scene in which Katharine Hepburn shows that a robot is no match for her intelligence.

Desk Set can teach us a lesson. The 1950s saw a surge of enthusiasm for AI. In 1952, Claude Shannon introduced Theseus, his maze-solving mouse (an amazing feat in design). In 1957, Frank Rosenblatt built his Mark I Perceptron, the grandfather of today’s neural networks. In 1958, H.P. Luhn wrote an awesome paper about business intelligence that describes an information management system we’re still working to make possible today. And in 1959, Arthur Samuel coined the term machine learning upon release of his checkers-playing program (Tom Mitchell has my favorite contemporary manifesto on what machine learning is and means). The world was buzzing with excitement. Society was to be totally transformed. Work would end, or at least fundamentally change to feature collaboration with intelligent machines.

This didn’t happen. We hit an AI winter. Neural networks, the forerunners of deep learning, were ridiculed as useless. Technology went on to change how we work and live, but not as the AI luminaries in the 1950s imagined. Many new jobs were created, and no one in 1950 imagined a Bay Area full of silicon transistors, and, later, adolescent engineers making millions off mobile apps. No one imagined Mark Zuckerberg. No one imagined Peter Thiel.

We need to ask different questions and address different people and process challenges to make AI work in the enterprise. I’ve seen the capabilities of over 100 large enterprises over the past two years, and can tell you we have a long way to go before smart machines outright replace people. AI products, based on data and statistics, produce probabilistic outputs whose accuracy and performance improve with exposure to more data over time. As Amos Tversky said, “man is a deterministic device thrown into a probabilistic universe.” People mistake correlation for causation. They prefer deterministic, clear instructions to uncertainties and confidence rates (I adore the first few paragraphs of this article, where Obama throws his hands up in despair after being briefed on the likely location of Osama bin Laden in 2011). Law firm risk departments, as Intapp CEO John Hall and I recently discussed, struggle immensely to break the conditioning of painstaking review to identify a conflict or potential piece of evidence, habits that must be broken to take advantage of the efficiencies AI can provide (Maura Grossman and Gordon Cormack have spent years marshaling evidence to show humans are not as thorough as they think, especially with the large volumes of electronic information we process today).

The moral of the story is, before we start pontificating about the end of work, we should start thinking about how to update the mental habits of our workforce so people get comfortable with probabilities and statistics. This requires training. It requires that senior management make decisions about their risk tolerance for uncertainty. It requires that management decide where transparency is required (situations where we know why the algorithm gave the answer it did, as in consumer credit) and where accuracy and speed are more important (as in self-driving cars, where it’s critical to make the right decision to save lives, and less important that we know why that decision was made). It requires the art of figuring out where to put a human in the loop to bootstrap the data required for future automation. It requires a lot of work, and it is creating new consulting and product management jobs to address the new AI workplace.
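To make “putting a human in the loop” a little more concrete, here is a minimal sketch, assuming a model that returns a label plus a confidence score. Everything in it is hypothetical: the `HumanInTheLoopRouter` class, the 0.9 threshold (standing in for management’s risk tolerance), and the reviewer function are illustrations, not any particular product. Confident predictions are automated; uncertain ones go to a person, and that person’s answer is kept as a new labeled example that could later be used to retrain the model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class HumanInTheLoopRouter:
    """Hypothetical helper: trusts confident predictions, sends uncertain
    ones to a person, and stores the person's answers as training data."""

    threshold: float = 0.9  # assumed risk tolerance chosen by management
    new_training_data: List[Tuple[Any, str]] = field(default_factory=list)

    def route(
        self,
        item: Any,
        predicted_label: str,
        confidence: float,
        ask_human: Callable[[Any], str],
    ) -> Tuple[str, str]:
        # Confident predictions are accepted without review.
        if confidence >= self.threshold:
            return predicted_label, "automated"
        # Uncertain predictions go to a human reviewer...
        human_label = ask_human(item)
        # ...whose decision is kept as a labeled example for future retraining.
        self.new_training_data.append((item, human_label))
        return human_label, "human"


# Usage with made-up inputs: a stand-in reviewer who always flags a conflict.
router = HumanInTheLoopRouter(threshold=0.9)
reviewer = lambda doc: "conflict"
first = router.route("engagement letter A", "no conflict", 0.97, reviewer)
second = router.route("engagement letter B", "no conflict", 0.62, reviewer)
print(first)   # ('no conflict', 'automated')
print(second)  # ('conflict', 'human')
print(len(router.new_training_data))  # 1
```

Raising the threshold sends more cases to people (more cost, less risk, more training data); lowering it automates more. Where to set it is exactly the risk-tolerance decision that falls to management.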

Distraction 2: Universal Basic Income

Universal basic income (UBI), a government program where everyone, at every income level in society, receives the same stipend of money on a regular basis (Andy Stern, author of Raising the Floor, suggests $1,000 per month per US citizen), is a corollary of the world without work. UBI is interesting because it unites libertarians (be they technocrats in Silicon Valley or hyper-conservatives like Charles Murray, who envisions a Jeffersonian ideal of neighbors supporting neighbors with autonomy and dignity) with socialist progressives (Andy Stern is a true man of the people, who led the Service Employees International Union for years). UBI is attracting attention from futurists like Peter Diamandis because they see it as a possible source of income in the impending world without work.

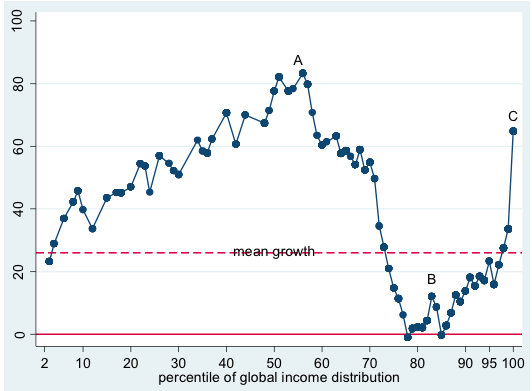

UBI is a distraction from a much more profound economic problem being created by our current global, technology-driven economy: income inequality. We all know this is the root cause of Trumpism, Brexit, and many of the other nationalist, regressive political movements at play across the world today. It is critical we address it. It’s not simple, as it involves a complex interplay of globalization, technology, government programs, education, the stigma of vocational schools in the US, etc. In The Seventh Sense, Joshua Cooper Ramo does a decent job explaining how network infrastructure leads to polarizing effects, concentrating massive power in the hands of a few (Google, Facebook, Amazon, Uber) and distributing micro power and expression to the many (the millions connected on these platforms). Nicholas Bloom does the same in this HBR article about corporations in the age of inequality. The economic consequences of our networked world can be dire, and must be checked by thinking and approaches that did not exist in the 20th century. Returning to mercantilism and protectionism is not a solution. It’s a salve that can only lead to violence.

That said, one argument for UBI my heart cannot help but accept is that it can restore dignity and opportunity for the poor. Imagine if every day you had to wait in lines at the DMV or burn under the alienating fluorescence of airport security. Imagine if, to eat, you had to wait in lines at food pantries, and could only afford unhealthy food that promotes obesity and diabetes. Imagine how much time you would waste, and how that time could be spent to learn a new skill or create a new idea! The 2016 film I, Daniel Blake is a must-see. It’s one of those movies that brings tears to my eyes just thinking of it. You watch a kind, hard-working, honest man go through the wringer of a bureaucratic system, pushed to the limits of his dignity before he eventually rebels. While UBI is not the answer, we all have a moral obligation, today, to empathize with those who might not share our political views because they are scared, and want a better life. They too have truths to tell.

Distraction 3: Conversational Interfaces

Just about everyone can talk; very few people have something truly meaningful and interesting to say.

The same holds for chatbots, or software systems whose front end is designed to engage with an end user as if it were another human in conversation. Conversational AI is extremely popular these days for customer service workflows (a next-generation version of recorded options menus for airline, insurance, banking, or utilities companies) or even booking appointments at the hair salon or yoga studio. The principles behind conversational AI are great: they make technology more friendly, enable technophobes like my grandmother to benefit from internet services as she shouts requests to her Amazon Alexa, and promise immense efficiencies for businesses that serve large consumer bases by automating and improving customer service (which, contrary to my first point about the end of work, will likely impact service departments significantly).

The problem, however, is that entrepreneurs seeking the next sexy AI product (or heads of innovation in large enterprises pressed to find a Trojan horse AI application to satisfy their boss and secure future budget) get so caught up in the excitement of building a smart bot that they forget that being able to talk doesn’t mean you have anything useful or intelligent to say. Indeed, at Fast Forward Labs, we’ve encountered many startups so excited by the promise of conversational AI that they neglect the less sexy but incontrovertibly more important backend work of building the intelligence that powers a useful front end experience. This work includes collecting, cleaning, processing, and storing data that can be used to train the bot. Scoping and understanding the domain of questions you’d like to have your bot answer (booking appointments, for example, is a good domain because it’s effectively a structured data problem: date, time, place, hair stylist, etc.). Building out recommendation algorithms to align service to customer if needed. Designing for privacy. Building out workflow capabilities to escalate to a human in the case of confusion or to route requests for future service fulfillment. Etc…
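Why booking counts as “effectively a structured data problem” is easiest to see in a toy example. The sketch below is an illustration only, assuming a hypothetical salon bot with three required fields (day, time, stylist). The regular expressions are a crude stand-in for the trained language models a real product would need, and the function names are made up. The point is the shape of the backend: conversation reduces to filling a small set of slots, asking follow-up questions for whatever is missing, and escalating to a human when the bot recognizes nothing at all.

```python
import re
from typing import Dict

# Hypothetical slot schema for a salon booking bot.
REQUIRED_SLOTS = ("day", "time", "stylist")

DAY_RE = re.compile(
    r"\b(monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b", re.IGNORECASE
)
TIME_RE = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", re.IGNORECASE)
STYLIST_RE = re.compile(r"\bwith\s+([A-Z][a-z]+)\b")  # e.g. "with Maria"


def extract_slots(message: str) -> Dict[str, str]:
    """Crude rule-based extraction standing in for a trained model."""
    slots = {}
    if m := DAY_RE.search(message):
        slots["day"] = m.group(1).lower()
    if m := TIME_RE.search(message):
        slots["time"] = m.group(1).lower()
    if m := STYLIST_RE.search(message):
        slots["stylist"] = m.group(1)
    return slots


def handle_message(message: str) -> Dict[str, object]:
    slots = extract_slots(message)
    if not slots:
        # Nothing recognizable: hand off to a person rather than guess.
        return {"action": "escalate_to_human"}
    missing = [name for name in REQUIRED_SLOTS if name not in slots]
    if missing:
        # Ask a follow-up question for whatever is still unknown.
        return {"action": "ask", "missing": missing, "slots": slots}
    return {"action": "book", "slots": slots}


print(handle_message("Can I book Tuesday at 3pm with Maria?"))
print(handle_message("I'd like a haircut on Friday"))
print(handle_message("My scalp is itchy, is that normal?"))
```

The first message fills every slot and books; the second yields a follow-up asking for time and stylist; the third is escalated. Everything that makes such a bot genuinely useful (training data, scheduling logic, privacy, routing) lives behind these few lines, not in the chat front end.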

The more general point I’m making with this example is that AI is not magic. These systems are still early in their development and adoption, and very few off-the-shelf capabilities exist. In an early adopter phase, we’re still experimenting, still figuring out bespoke solutions on particular data sets, still restricting scope so we can build something useful that may not be nearly as exciting as our imagination desires. When she gives talks about the power of data, my colleague Hilary Mason frequently references Google Maps as a paradigmatic data product. Why? Because it’s boring! The front end is meticulously designed to provide a useful, simple service, hiding the immense complexity and hard work that powers the application behind the scenes. Conversation and language are not always the best way to present information: the best AI applications will come from designers who use judgment to interweave text, speech, image, and navigation through keys and buttons.

Distraction 4: Existential Risk

Enlightenment is man’s emergence from his self-imposed nonage. Nonage is the inability to use one’s own understanding without another’s guidance. This nonage is self-imposed if its cause lies not in lack of understanding but in indecision and lack of courage to use one’s own mind without another’s guidance. Dare to know! (Sapere aude.) “Have the courage to use your own understanding,” is therefore the motto of the enlightenment.

This is the first paragraph of Immanuel Kant’s 1784 essay Answering the Question: What is Enlightenment? (It’s short, and very well worth the read.) I cite it because contemporary discourse about AI becoming an existential threat reminds me of a regression back to the Middle Ages, when Thomas Aquinas and Wilhelm von Ockham presented arguments on the basis of prior authority: “This is true because Aristotle once said that…” Descartes, Luther, Diderot, Galileo, and the other powerhouses of the Enlightenment thought this was rubbish that led to all sorts of confusion. They toppled the old guard and placed authority in the individual, each and every one of us born with the same rational capabilities to build arguments and arrive at conclusions.

Such radical self-reliance has waxed and waned throughout history, the Enlightenment offset by the pulsing passion of Romanticism, only to be resurrected in the more atavistic rationality of Thoreau or Emerson. It seems that the current pace of change in technology and society is tipping the scales back towards dependence and guidance. It’s so damn hard to keep up with everything that we can’t help but relinquish judgment to the experts. Which means, if Bill Gates, Stephen Hawking, and Elon Musk, the priests of engineering, science, and ruthless entrepreneurship, all think that AI is a threat to the human race, then we mere mortals may as well bow down to their authority. Like paternal guardians, they must surely know more than we do.

The problem here is that the Chicken Little logic espoused by the likes of Nick Bostrom (where we must prepare for the worst of all possible outcomes) distracts us from the real social issues AI is already exacerbating. These real issues are akin to former debates about affirmative action, where certain classes, races, and identities receive preferential treatment and opportunity to the detriment and exclusion of others. An alternative approach to the ethics of AI, however, is quickly gaining traction. The Fairness, Accountability, and Transparency in Machine Learning movement focuses not on rogue machines running amok (another old idea, this time from Goethe’s 1797 poem The Sorcerer’s Apprentice), but on understanding how algorithms perpetuate and amplify existing social biases and doing something to change that.

There’s strong literature focused on the practical ethics of AI. A current Fast Forward Labs intern just published a post about a tool called FairML, which he used to examine implicit racial bias in criminal sentencing algorithms. Cathy O’Neil regularly writes articles about the evils of technology for Bloomberg (her rhetoric can be very strong, and risks alienating technologists or stymying buy-in from pragmatic moderates). Gideon Mann, who leads data science for Bloomberg, is working on a Hippocratic oath for data scientists. Blaise Agüera y Arcas and his team at Google are constantly examining and correcting for potential bias creeping into their algorithms. Clare Corthell is mobilizing practitioners in San Francisco to discuss and develop ethical data science practices. The list goes on.

Designing ethical algorithms will be a marathon, not a sprint. Executives at large enterprises are just wrapping their heads around the financial potential of AI. Ethics is not their first concern. I predict the dynamics will resemble those in information security, where fear of a tarred reputation spurs corporations to act. It will be interesting to see how it all plays out.

Distraction 5: Personhood

The language used to talk about AI and the design efforts made to make AI feel human and real invite anthropomorphism. Last November, I spoke on a panel at a conference Google’s Artists and Machine Intelligence group hosted in Paris. It was a unique event because it brought together highly technical engineers and highly non-technical artists, which was a wonderful staging ground to see how people who don’t work in machine learning understand, interpret, and respond to the language and metaphors engineers use to describe the vectors and linear algebra powering machines. Sometimes this is productive: artists like Ross Goodwin and Kyle McDonald deliberately play with the idea of relinquishing autonomy to a machine, liberating the human artist from the burden of choice and control, and opening the potential for serendipity as a network shuffles the traces of prior human work to create something radical, strange, and new. Sometimes this is not productive: one participant, upon learning that Deep Dream is actually an effort to interpret the black box impenetrability of neural networks, asked if AI might usher in a new wave of Freudian psychoanalysis. (This stuff tries my patience.) It’s up for debate whether artists can derive more creativity from viewing an AI as an autonomous partner or an instrument whose pegs can be tuned like the strings of a guitar to change the outcome of the performance. I think both means of understanding the technology are valid, but ultimately produce different results.

The general point here is that how we speak about AI changes what we think it is and what we think it can or can’t do. Our tendency to anthropomorphize what are only matrices multiplying numbers, as determined by some function, is worthy of wonder. But I can’t help but furrow my brow when I read about robots having rights like humans and animals. This would all be fine if it were only the path to consumer adoption, but these ideas of personhood may have legal consequences for consumer privacy rights. For example, courts are currently assessing whether the police have the right to information about a potential murder collected from Amazon Echo (privacy precedent here comes from Katz v. United States, the grandfather case in adapting the Fourth Amendment to our new digital age).

Joanna Bryson at the University of Bath and Princeton (following S. M. Solaiman) has proposed one of the more interesting explanations for why it doesn’t make sense to imbue AI with personhood: “AI cannot be a legal person because suffering in well-designed AI is incoherent.” Suffering, says Bryson, is integral to our intelligence as a social species. The crux of her argument is that we humans understand ourselves not as discrete monads or brains in a vat, but as essentially and intrinsically intertwined with other humans around us. We play by social rules, and avoid behaviors that lead to ostracism and alienation from the groups we are part of. We can construct what appears to be an empathetic response in robots, but we cannot construct a self-conscious, self-aware being who exercises choice and autonomy to pursue reward and recognition, and avoid suffering (perhaps reinforcement learning can get us there: I’m open to being convinced otherwise). This argument goes much deeper than business articles arguing that work requiring emotional intelligence (sales, customer relationships, nursing, education, etc.) will be more valued than quantitative and repetitive work in the future. It’s an incredibly exciting lens through which to understand our own morality and psychology.

Conclusion

As mentioned at the beginning of this post, collective fictions are the driving force of group alignment and activity. They are powerful, beautiful, the stuff of passion and motivation and awe. The fictions we create about the potential of AI may just be the catalyst to drive real impact throughout society. That’s nothing short of amazing, as long as we can step back and make sure we don’t forget the hard work required to realize these visions, and the risks we have to address along the way.

* Sam Harris and a16z recently interviewed Harari on their podcasts. Both of these podcasts are consistently excellent.

**One of my favorite professors at Stanford, Russell Berman, argued something similar in Fiction Sets You Free. Berman focuses more on the liberating power of fiction to imagine a world and political situation different from present conditions. His book also comments on the unique historicity of fiction, where works from different periods refer back to precedents and influences from the past.

Thanks for a brilliant effort, Katie, as usual. Well articulated and leaving much content to digest long after the first read. But of course I must take issue with UBI, especially, “…one argument for UBI my heart cannot help but accept is that it can restore dignity and opportunity for the poor.” Since the first coin was minted, despite countless attempts to eradicate poverty by handing out money, poverty remains. And the reason is logical. The forces that drive some to create the amazing AI of which you write are the same dynamics that create an economy in which AI scientists and dishwashers must co-exist. The Utopian view that these tasks should be compensated equally is folly, and the stuff of a long history of human wreckage. UBI is only the latest marketing spin on a tired and failed scheme to redistribute wealth. It is perhaps the most enduring “fiction” of a fictional collective imagination.

Thanks for your comments, Gary. To be clear, I consider UBI to be one of the distractions diverting our attention away from the real issue of income inequality. Pragmatically, it would be an extremely expensive effort that doesn’t make arithmetic sense without huge tax increases (which people won’t accept) or redistributing already precious resources to people who don’t even need them! I’d greatly prefer redirecting the oodles of money devoted to defense to our education system. Providing people with equal education opportunities is the soundest path to long-term wealth redistribution. These days, that’s not only for young kids, but also for people who need a skills reset at later points in their career (as the half-life of knowledge and skills shortens with the increasing pace of technology development). The US sucks at that. Sucks. Totally sucks.

Unlike you, I think we need to take the unequal distribution of wealth seriously, as opposed to viewing it as the natural consequence of the value of different types of work. I’m no expert in this, but I remember, when reading Karl Marx in college, learning that the reason the global uprising of the proletariat did not occur as Marx predicted was that the middle class ironed out the vast inequalities that existed at the beginning of the industrial revolution. Can the invisible hand do the same for us this time around? Or do we need to do something?

Your section on UBI should be rethought. You said UBI is a distraction from addressing inequality (the very thing it is trying to address as a potential solution). You then say “While UBI is not the answer”, but never explained why it is not the answer.

The first section also reads weirdly. “The moral of the story is, before we start pontificating about the end of work, we should start thinking about how to update our workforce mental habits to get comfortable with probabilities and statistics.” Isn’t this exactly “pontificating about the end of work”, by thinking about potential mitigations like education?

Some of the other points you make are valid, but your overarching argument for these things being distractions is weak. I’d much rather read about what you think the hard work you mentioned in the conclusion actually involves.

Thanks for your comments, and sorry it took so long to respond.

As regards education, I do not think that work evolving is the same thing as work ending. It’s certainly the case that new AI technologies (and other technologies) are going to change how we work. There will be new man-machine partnerships, in particular as companies do the work to collect training data for supervised algorithms. But learning how to engage with probabilistic systems is not the same thing as having those systems flat out replace people in jobs. It’s the idea that work by humans stops that I’m arguing against. We have a long way to go in most verticals. Some, like truck driving, are more susceptible than others.

Also, what I try to say in my article is that income inequality is a massive problem and one that we need to fix. But I don’t think UBI is the solution, at least in the US. The reason for that is arithmetic. To provide a measly uptick of $1,000/month (it would be horrible to live in the Bay Area or NYC on that little money), we need to expand the national budget by more than a trillion dollars. Where does that money come from, if no one will want to pay more taxes? And why redirect resources away from educational programs, which are so crucial to help curb long-term income inequality gaps, to rich people who don’t need the basic income support?
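To make the arithmetic above concrete, here is a back-of-the-envelope sketch. The $1,000/month stipend is the figure cited in the post; the count of roughly 250 million US adults is an approximate assumption added for illustration, not a number from the post. The first calculation needs no population estimate at all: it shows how few recipients it takes for the program alone to pass $1 trillion a year.

```python
# Back-of-the-envelope UBI arithmetic.
MONTHLY_STIPEND = 1_000                   # dollars per person per month (figure cited in the post)
ANNUAL_PER_PERSON = MONTHLY_STIPEND * 12  # 12,000 dollars per person per year
ONE_TRILLION = 10**12


def annual_cost(recipients: int) -> int:
    """Total yearly outlay in dollars for a given number of recipients."""
    return ANNUAL_PER_PERSON * recipients


# Number of recipients at which the program costs $1 trillion a year.
break_even = ONE_TRILLION / ANNUAL_PER_PERSON
print(f"$1 trillion is reached at about {break_even / 1e6:.1f} million recipients")

# Assumption: roughly 250 million US adults (approximate, not from the post).
ASSUMED_ADULTS = 250_000_000
cost = annual_cost(ASSUMED_ADULTS)
print(f"Cost for {ASSUMED_ADULTS:,} adults: ${cost / ONE_TRILLION:.1f} trillion per year")
```

Any program covering more than about 83 million people passes the trillion-dollar mark; at the assumed adult population, the cost lands around $3 trillion a year before any administrative overhead.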

Income inequality is a massive, systemic problem of the global capitalist system. And we must do something. But it’s going to take a lot of complex thinking to find the right fix. I don’t think UBI is it.

I could be wrong, and am happy to be convinced otherwise!